At GiveWell, we’re committed to understanding the impact of our grantmaking and improving our decisions over time. That’s why we’ve begun conducting “lookbacks”—reviews of past grants, typically two to three years after making them, that assess how well they’ve met our initial expectations and what we can learn from them.

We conduct lookbacks for two main reasons: accountability and learning. By examining both the successes and challenges of past grants and publishing those findings on our website, we aim to be transparent about the impact of donor funding. Systematically reviewing past grants also helps us identify ways to improve our decision-making. When lookbacks identify challenges, lower-than-expected impact, or key questions that we think we should have an answer to, we use these findings to adjust our approach to similar grants in the future or prioritize follow-up research. When lookbacks show higher-than-expected impact, that’s valuable, too—in those cases, we made the error of underestimating impact and might be granting too little to certain programs.

Lookbacks compare what we thought would happen before making a grant to what we think happened after at least some of the grant’s activities have been completed and we’ve conducted follow-up research. While we can’t know everything about a grant’s true impact, we can learn a lot by talking to grantees and external stakeholders, reviewing program data, and updating our research. We then create a new cost-effectiveness analysis with this updated information and compare it to our original estimates. There’s a lot we can’t know with certainty—like how many lives were saved because of a grant—but we believe these lookbacks teach us a lot about the quality of our past decisions and how to improve.

Lookbacks at two different scales

We recently completed our first two lookbacks: one reviewed our initial $17 million grant to New Incentives for childhood vaccination incentives in northern Nigeria, and the other reviewed a $7.5 million grant to Helen Keller Intl for vitamin A supplementation expansion in Nigeria. We selected these grants for our initial lookbacks because enough time had passed to observe meaningful outcomes, the findings could inform upcoming grant decisions, and the grants were large or otherwise important to our portfolio.

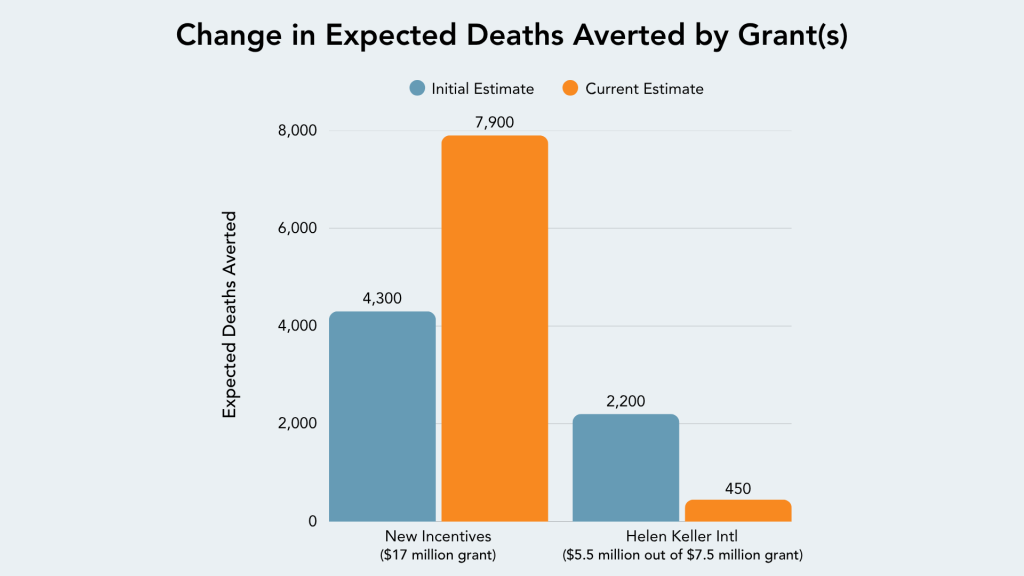

These initial lookbacks showed different outcomes for the two programs. For New Incentives, our best guess is that our $17 million grant averted 7,900 deaths from vaccine-preventable diseases—significantly more than our estimate at the time of making the grant of 4,300 deaths. Our estimate of cost-effectiveness increased because costs per child dropped significantly as New Incentives expanded over time. For Helen Keller Intl, our best guess is that the portion of the grant spent over the first two years of the grant period ($5.5 million spent between mid-2021 and mid-2023) will avert around 450 deaths, lower than our initial projection of 2,200 deaths, because we now think vitamin A deficiency rates in Nigeria are lower and counterfactual coverage rates are higher than we originally estimated.
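To make the size of these revisions concrete, here is a minimal sketch that compares the updated and initial death-averted estimates quoted above. The dictionary layout and the `update_ratio` helper are illustrative assumptions, not part of GiveWell's actual cost-effectiveness models; only the four figures come from this page.

```python
# Death-averted estimates quoted in this post.
# The structure and names below are hypothetical, for illustration only.
estimates = {
    "New Incentives": {"initial_deaths": 4300, "updated_deaths": 7900},
    "Helen Keller Intl": {"initial_deaths": 2200, "updated_deaths": 450},
}


def update_ratio(initial: float, updated: float) -> float:
    """Return the updated estimate as a multiple of the initial estimate."""
    return updated / initial


for name, e in estimates.items():
    ratio = update_ratio(e["initial_deaths"], e["updated_deaths"])
    print(f"{name}: updated estimate is {ratio:.2f}x the initial estimate")
# New Incentives: updated estimate is 1.84x the initial estimate
# Helen Keller Intl: updated estimate is 0.20x the initial estimate
```

A ratio above 1 means the lookback raised the estimate, and a ratio below 1 means it lowered it. Note that the Helen Keller Intl figure covers only the $5.5 million spent in the first two years, so the two ratios are not measured on identical terms.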

What did we find?

New Incentives: Conditional cash transfers for vaccination in Nigeria

New Incentives’ conditional cash transfers program aims to increase coverage of routine childhood vaccinations in northern Nigeria by providing small cash incentives to caregivers who bring their children into clinics for vaccinations. Routine vaccination significantly reduces risk of illness and death from vaccine-preventable diseases.

GiveWell has directed over $120 million in grants to support New Incentives’ conditional cash transfer program since it became a Top Charity in 2020. Our lookback focuses on our initial $17 million grant and performance during the years 2021-2023 because we think this allows us to compare actual results from completed programming against our initial expectations.

Here are key takeaways and next steps from our lookback on these grants to New Incentives:

- Cost-effectiveness estimate increased: We now estimate New Incentives is about twice as cost-effective as our initial assessment. When we made our initial grants, we estimated the program was about 11 times our cost-effectiveness benchmark in the original three Nigerian states we funded.[1] Given what we know now, we estimate the grant was approximately 25 times our cost-effectiveness benchmark in those locations. As a result, we estimate that our funding has averted or will avert around 7,900 deaths, compared to our initial projection of around 4,300.

- Substantial cost reduction: The increase in cost-effectiveness was driven by a fall in cost per child enrolled. At the time of the initial grant, we expected New Incentives’ cost per child enrolled to be ~$40, but we now think it was ~$20. We think this is due to economies of scale, efficiency efforts by New Incentives, and the devaluation of the Nigerian naira, but we haven’t prioritized a deep assessment of drivers of cost changes. We plan to look into this in 2025.
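The link between cost per child and cost-effectiveness can be sketched with simple arithmetic. This is a deliberately simplified illustration, not GiveWell's model: it assumes the benefit per child enrolled is unchanged, so that cost-effectiveness scales inversely with cost per child. The function name and that assumption are ours; the inputs (~11x, ~$40, ~$20) are the figures quoted above.

```python
def scaled_cost_effectiveness(
    initial_multiple: float, initial_cost: float, updated_cost: float
) -> float:
    """Rescale a cost-effectiveness multiple for a change in cost per child.

    Simplifying assumption (ours, not GiveWell's): the benefit per child
    enrolled stays fixed, so cost-effectiveness is inversely proportional
    to cost per child.
    """
    return initial_multiple * initial_cost / updated_cost


# ~11x benchmark at ~$40 per child, recomputed at ~$20 per child.
print(scaled_cost_effectiveness(11, 40, 20))  # 22.0
```

Under this assumption, halving the cost per child takes the estimate from about 11x to about 22x, which accounts for most of the move to the approximately 25x reported in the lookback. The sketch does not explain the remaining gap; the full lookback has the actual modeling assumptions.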

Helen Keller Intl: Vitamin A supplementation in Nigeria

Helen Keller Intl’s vitamin A supplementation program addresses vitamin A deficiency by funding government-run vitamin A supplementation campaigns that provide supplements to preschool-age children. Vitamin A deficiency can increase the risk of infection and lead to death in young children.

GiveWell has directed over $200 million in grants to support Helen Keller Intl’s vitamin A supplementation program since it became a Top Charity in 2017, and we continue to view it as one of the most outstanding and cost-effective programs we have found. This lookback focuses on a $7.5 million grant we made to support expansion of Helen Keller Intl’s program into five new Nigerian states from 2021 to 2024.

Here are the key takeaways and next steps from our lookback on this grant to Helen Keller Intl:

- Cost-effectiveness estimate decreased: We now estimate the program is significantly less cost-effective than initially projected: about 2 to 11 times our cost-effectiveness benchmark, varying by state, down from our initial nationwide estimate of about 24 times our benchmark. This decrease was driven by new data from Nigeria showing a lower prevalence of vitamin A deficiency and a higher rate of children receiving vitamin A supplementation without campaigns than we initially thought. With these updates, we think this grant has averted or will avert 450 deaths, compared to 2,200 using the assumptions we made when we funded the grant. We’re highly uncertain about these estimates, though, given our high level of uncertainty about vitamin A deficiency rates in Nigeria.

- Challenges with quick exits: Based on state-specific estimates we generated in 2022 that showed cost-effectiveness below GiveWell’s funding threshold, Helen Keller Intl exited two states shortly after beginning work. These quick exits may have strained their relationships with governments, and the lack of a plan for transitioning program responsibilities back to local governments may have contributed to subsequent declines in coverage. Going forward, we plan to develop a stronger understanding of grantees’ plans for exits and potential challenges, with the goal of enabling smoother transitions.

- Concerns about coverage data: While coverage surveys showed high levels of vitamin A supplementation after Helen Keller’s entry, we have some concerns with their survey methodology. We have shared these concerns with Helen Keller and have seen initial indicators that they have implemented changes to address them. We plan to revisit this issue in the future.

What can we apply?

These lookbacks revealed three broader takeaways for our grantmaking, beyond these grants. We’ve already started using these findings in our recent grant decisions and are investigating some of the open questions they raised.

- Cost-effectiveness estimates can change dramatically over time. Both lookbacks revealed significant changes in cost-effectiveness, though in opposite directions. New Incentives proved to be more cost-effective than expected, while Helen Keller Intl’s program was less cost-effective. These shifts highlight the inherent uncertainty in our initial estimates and the importance of continued monitoring and reassessment.

- Location-specific analysis improves resource allocation but requires caution. There is substantial geographic variation in cost-effectiveness (e.g., we think Helen Keller’s program was 2 to 11 times our cost-effectiveness benchmark across different states in Nigeria during the grant period). While targeting funding to states where we estimate high cost-effectiveness aims to maximize impact, it relies on state-level data (e.g., on burden or costs) that we think are especially noisy. To guard against making decisions based on potentially misleading estimates, we plan to triangulate our state-level cost-effectiveness estimates against perspectives from implementing organizations, ministries of health, and other data sources.

- We need to engage more with local stakeholders. In both lookbacks, we found we’d underinvested in speaking directly with local stakeholders like government officials and subject matter experts in the regions where we fund programs. We think these conversations can help us better understand local conditions, potential implementation challenges, and other considerations that may not be captured in our models. We’ve already begun to systematically increase the number of conversations we have with local stakeholders during each grant investigation, and we have substantially increased our site visits and networking efforts in order to be more fully grounded in the experiences and perspectives of people in the communities our work touches.

What happens next?

These first lookbacks have already informed our work with these grantees and others. As we approach renewal decisions, we are being more cautious about how we respond to fluctuations in cost-effectiveness estimates based on noisy state-level data. The lookbacks have also helped us identify important research questions to prioritize, such as digging into cost reductions over time as programs scale.

Going forward, we plan to conduct lookbacks more regularly across our grant portfolio and publish what we find. Because this was our first iteration of lookbacks, we also expect to improve our approach for doing them over time.

For more detail, including our specific modeling assumptions and calculations, check out the full New Incentives lookback and Helen Keller Intl lookback.

Note on corrections to this page

We first published this page on July 9. On July 21, we discovered an error in our impact estimates in the New Incentives lookback. That prompted us to review both our New Incentives and Helen Keller lookbacks.

As a result of that review, we corrected the following mistakes in our New Incentives lookback:

- Our initial framing was misleading. In the previous version of this page, we highlighted initial and current estimates of the full $120 million we’ve directed to New Incentives. We think this was misleading because this includes funds that have not been spent yet and infants that have not been enrolled yet. As a result, this updated version focuses on the initial $17 million grant from 2021 to 2023. We think this provides a more firmly retrospective analysis and cleaner comparison of what we thought initially versus what we think now.[2]

- We made a mistake in how we estimated cost per death averted in our analysis of New Incentives’ impact. We think this caused us to underestimate the number of deaths that New Incentives has averted or will avert by roughly half. We initially said that our $120 million in funding to New Incentives has averted or will avert ~27,000 deaths. We now think that estimate is ~45,000.

The bottom line conclusion remains that we think substantially more deaths were averted by this grant to New Incentives than we initially projected.

We also corrected mistakes in our Helen Keller lookback to account for some similar issues. However, these did not have a meaningful effect on our overall assessment.[3]

Notes

| ↑1 | To date, GiveWell has used GiveDirectly’s unconditional cash transfers as a benchmark for comparing the cost-effectiveness of funding opportunities, which we describe in multiples of “cash” (more). In 2024, we reevaluated GiveDirectly’s cash program and now estimate it is three to four times more cost-effective than we’d previously estimated (more). For now, we continue to use our existing benchmark while we evaluate what benchmark we want to use going forward. |
|---|---|
| ↑2 | More detail about these updates is in our New Incentives lookback. |
| ↑3 | More detail about these updates is in our Helen Keller lookback. |

Comments

This came up on the EA Forum as well, but do you mind articulating the difference between these new “lookbacks” and other post-hoc evaluations or reflections GiveWell has done as far back as 2011?

Hi Ian,

Thanks for this question! While we’ve reviewed the performance of the organizations we’ve recommended and assessed major failures, we’ve never conducted a formal evaluation of our past grants until now.

Lookbacks ask questions of specific grants like:

– How much did the program cost relative to what we projected?

– How many people were reached relative to our projection?

– How would improvements we’ve made to our process change our estimate?

We’re excited to implement these lookbacks more regularly across our grant portfolio and use what we learn to improve our future grantmaking.
