The Gates Foundation states that "funders have sharply cut their international aid to agricultural development over the past few decades." The Foundation implies that this decline is a major reason Africa has not seen a "Green Revolution."

We have been unable to locate support for this claim.

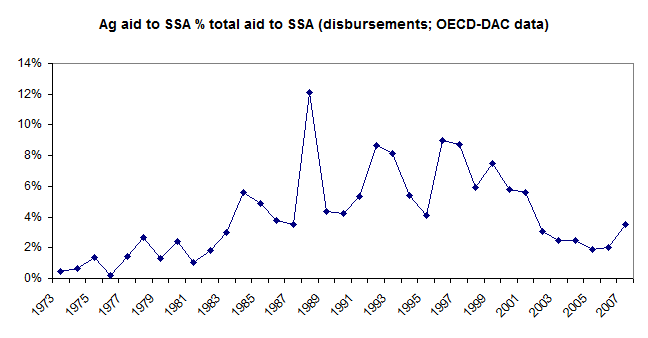

Using OECD data (the most reliable source on official aid flows that we know of), we graphed the share of disbursed aid to Africa classified under the "agriculture" sector from the 1970s onward. The figures for early years may be artificially low because reporting was less standardized then, but we see no downward trend of the kind the Gates Foundation describes.
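For readers who want to reproduce this kind of check, here is a minimal sketch of the calculation, assuming the OECD data has already been exported as simple (year, sector, amount disbursed) rows. The row layout, the sector label "agriculture", and the function names are hypothetical, and the numbers in the usage example are made up for illustration; they are not OECD figures. The sketch computes each year's agricultural share of total disbursements, then an ordinary least-squares slope as one rough indicator of a trend.

```python
from collections import defaultdict


def agriculture_share_by_year(records):
    """Return {year: agricultural share of that year's total disbursements}.

    `records` is an iterable of (year, sector, amount) tuples; this layout is
    a hypothetical stand-in for an exported OECD table.
    """
    totals = defaultdict(float)
    agriculture = defaultdict(float)
    for year, sector, amount in records:
        totals[year] += amount
        if sector == "agriculture":  # hypothetical sector label
            agriculture[year] += amount
    # Skip years with no recorded disbursements to avoid dividing by zero.
    return {y: agriculture[y] / totals[y] for y in sorted(totals) if totals[y] > 0}


def trend_slope(shares):
    """Ordinary least-squares slope of share against year (share per year)."""
    years = list(shares)
    values = [shares[y] for y in years]
    n = len(years)
    mean_x = sum(years) / n
    mean_y = sum(values) / n
    sxx = sum((x - mean_x) ** 2 for x in years)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(years, values))
    return sxy / sxx


# Made-up illustrative numbers, NOT OECD data.
records = [
    (1980, "agriculture", 10.0), (1980, "other", 90.0),
    (1990, "agriculture", 12.0), (1990, "other", 88.0),
    (2000, "agriculture", 9.0), (2000, "other", 91.0),
]
shares = agriculture_share_by_year(records)
slope = trend_slope(shares)
print(shares, slope)
```

A slope near zero, or one that is negative but tiny, would not support a claim of "sharp" cuts; looking at the full series, as in the graph, remains the more informative check.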

It's frustrating that the Gates Foundation doesn't provide a source for its claim. In general, the material on its website stays at a very broad level and gives people curious about its underlying reasoning no way to dig deeper. As a result, any criticism or examination of its work must either be very superficial or happen offline (i.e., through direct communication with the Foundation, an opportunity few people seem to get easily or often).

Added 10/30/09: here’s our source data