What would you do to make the world a better place?

Would you …

- Click a link?

- Forfeit a 69¢ taco that you had no practical way of getting hold of anyway?

- Type “I’m”?

- Make a wish?

- Scratch your armpit?

Of course you would. And of course you can’t.

Of course you would. And of course you can’t.

If people buy from (or look at ads for) companies based on their donations to charity, I promise the prices will rise by however much they’re donating. When sponsors give to charity for every home run the White Sox hit, they have a range in mind, and you can bet that if they end up owing way more than expected, it will somehow come out of next year’s pledge (even if it’s through their insurance company’s quote, etc., etc. …)

I’ve debated the specifics of various schemes along these lines before. Right now, I want to drop my usual preference for concreteness, step back from all the mechanics, and just be as general as I can be.

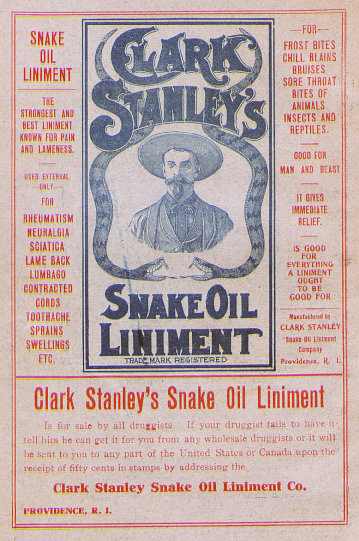

If someone tells you you can make a difference without either giving up anything valuable or doing anything useful, they are wrong.

When trying to figure out which schemes work and which don’t, it seems that’s about all you need to know.

As for the notion that these kinds of schemes “raise awareness” … man, I’m sick of hearing about “raising awareness.” If you’re not, just – read this.

I’m surprised by how many otherwise intelligent people get pulled in by promises of saving the world by yawning. Sure, they’re tempting, and it can be very complicated and confusing to figure out precisely why they don’t work … but the fundamental problem couldn’t be more obvious. Please don’t fall for this stuff. That’s all.

The big key here, to me, is randomization. Trying to make a good study out of a non-randomized sample can get very complicated and problematic indeed. But if you separate people randomly and check up on their school grades or incomes (even if you just use proxies like public assistance), you have a data set that is probably pretty clean and easy to analyze in a meaningful way. And as a charity deciding whom to serve, you’re the only one who can take this step that makes everything else so much easier.
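
The point about randomization can be sketched in a few lines of Python. This is a minimal illustration under assumptions made purely for the example, not a description of how any particular charity runs its evaluations: the function names `assign_randomly` and `estimate_effect` are hypothetical, and the outcome data is simulated rather than real. Once applicants are split at random, comparing the two groups' average outcomes is essentially all the analysis the basic question needs.

```python
import random
import statistics


def assign_randomly(applicants, seed=None):
    """Shuffle applicants and split them into a served group and a comparison group."""
    rng = random.Random(seed)
    shuffled = list(applicants)
    rng.shuffle(shuffled)
    half = len(shuffled) // 2
    return shuffled[:half], shuffled[half:]


def estimate_effect(served_outcomes, comparison_outcomes):
    """Difference in mean outcomes between the two groups.

    Because assignment was random, the groups are expected to differ only in
    whether they were served, so this simple difference estimates the
    program's average effect without further statistical adjustment.
    """
    return statistics.mean(served_outcomes) - statistics.mean(comparison_outcomes)


if __name__ == "__main__":
    applicants = list(range(1000))  # hypothetical applicant IDs
    served, comparison = assign_randomly(applicants, seed=0)

    # Simulated follow-up data, for illustration only: each applicant gets a
    # random baseline outcome (e.g. an income), and the served group gets a
    # HYPOTHETICAL true effect of +5 on top of it.
    rng = random.Random(1)
    TRUE_EFFECT = 5.0
    served_outcomes = [rng.gauss(50, 10) + TRUE_EFFECT for _ in served]
    comparison_outcomes = [rng.gauss(50, 10) for _ in comparison]

    estimate = estimate_effect(served_outcomes, comparison_outcomes)
    print(f"estimated effect: {estimate:.2f} (simulated true effect: {TRUE_EFFECT})")
```

The hard part is not in this code; it is the decision, made before anyone is served, to let chance pick who gets help. Without that step, the comparison group may differ from the served group in ways (motivation, family support) that a simple difference in means cannot separate from the program's effect.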

The big key here, to me, is randomization. Trying to make a good study out of a non-randomized sample can get very complicated and problematic indeed. But if you separate people randomly and check up on their school grades or incomes (even if you just use proxies like public assistance), you have a data set that is probably pretty clean and easy to analyze in a meaningful way. And as a charity deciding whom to serve, you’re the only one who can take this step that makes everything else so much easier.

First, the moral of the story: deciding where to give is hard. Elie and I have gone through three or four completely different approaches before finding one we’re pretty happy with.

First, the moral of the story: deciding where to give is hard. Elie and I have gone through 3-4 completely different approaches, before finding one we’re pretty happy with.