As we did last year, we’re going to be posting our annual self-evaluation and plan as a series of blog posts.

Our self-evaluation starts with a set of critical questions about GiveWell, intended to examine how well we’re accomplishing our high-level goals. For the most part, our high-level goals are the same as they were last year, so our questions for GiveWell are the same as the ones we laid out last year. However, there is one major change in how we see the goals of GiveWell: we no longer assign high importance to the number of causes covered (i.e., the number of options we provide to donors). Instead, we want to focus on finding outstanding charities that donors can be confident in, and closing those charities’ funding gaps to the extent that we can.

We see charity evaluation groups as falling into the following possible categories:

1. Groups that rate as many charities as possible. Donors come to them already having a particular charity in mind to give to, and search for that charity.

2. Groups that suggest charities for as many causes as possible. Donors come to them knowing what sort of cause they want to support (U.S. education, global health, etc.) but not which charity, and get a recommendation.

3. Groups that simply focus on finding outstanding charities. Donors come to them looking for outstanding giving opportunities (they are often issue-agnostic).

We started GiveWell as issue-agnostic donors looking for the best giving opportunities we could find, and we have always primarily been interested in #3. We’ve never had a serious interest in #1 above – distinguishing between “worst,” “bad,” “good,” and “better” is too much of a distraction when what we care about is “best.” But for most of our history, we’ve seen ourselves as possibly being on a trajectory toward becoming #2 in addition to #3. We’ve talked about covering a broad array of causes to interest as many donors as possible, thus increasing our influence and visibility. Many of those who have criticized us have focused on the small number of causes we’ve covered, and expressed the hope that we will eventually cover many more.

A couple of things have made us rethink this goal:

1. We see finding more “gold medal” charities than we have so far as both urgent and difficult.

When the 2010 holiday season was approaching and we started thinking about our outreach strategy, we realized that we had only one charity we could personally feel really good about aggressively raising money for. While we think all our recommended charities stand well above the other options available to donors, VillageReach was the only one about which I felt I could sit down face-to-face with someone and say, “Give as much as you can to this one.”

Why? Because not only does VillageReach have outstanding evidence of effectiveness; it also has outstanding bang-for-the-buck (in absolute terms, not just relative terms) and, most importantly, a concrete plan for using additional funding.

We personally don’t like raising money for groups about which we can’t say all these things. We’re happy to recommend the best microfinance charity to someone looking for one, but we can’t honestly say that we think dollars given to it accomplish as much good as possible.

VillageReach had a “stretch” fundraising target for the year of $1.5 million. That gap has now been more than closed. VillageReach needs a total of $4.4 million for its multi-year project, so we are happy to leave it as our top-rated charity for now, but if our growth continues, the entire expansion could be funded by the end of the year. Thus, we are starting to get dangerously close to the point where the number of dollars we influence exceeds the number of dollars we know how to allocate very effectively.

We see this as an urgent situation. It would be a major problem for GiveWell if we essentially had more demand for our research (i.e., donors interested in following our recommendations) than supply (i.e., charities able to absorb this funding effectively). Thus, one of our top priorities for 2011 is finding more “gold medal” charities to which we can give a wholehearted recommendation. We feel it would be a major distraction, and a mistake, to try to find the “best of the bunch” within causes where no groups really shine by our criteria.

2. The benefits of covering extra causes don’t seem very large.

The argument we’d always used for covering more causes was that covering more causes means appealing to more donors. In theory, this is true, but for the specific audience we seem to be attracting, the value of breadth seems surprisingly low.

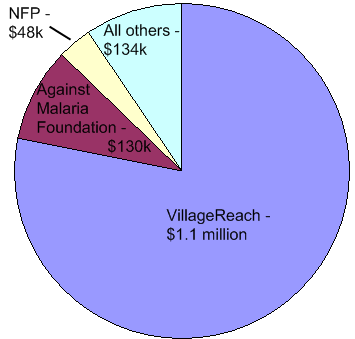

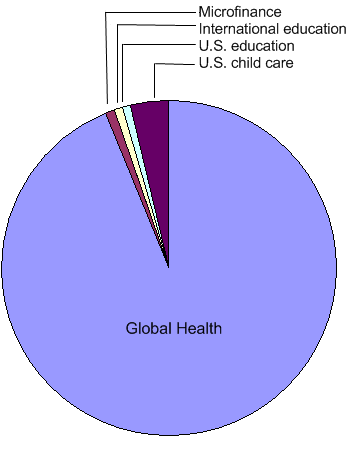

The following charts show the percentage of our 2010 “money moved” (donations given to our recommended charities as a result of our research, which we track in a variety of ways) to different categories of charity. A future post will go into more detail on how we track “money moved” and what the figures were for 2010.

Bottom line: it looks like the overwhelming majority of our donors follow our recommendations regarding both the most promising cause and the most promising organization.

Even our top-rated U.S. charity didn’t attract nearly as much funding from our donors as our global health charities did. Meanwhile, the work we’ve put into accommodating donors interested in narrower causes – education and microfinance – has barely registered in terms of increased “money moved.”

And education and microfinance are still pretty broad, popular causes. I’d expect a “cancer research” or “global warming” recommendation to be pretty comparable to education and microfinance in terms of new money moved; I’d expect a narrower cause like “homelessness in New York City” to bring much less.

This doesn’t mean that all donors are issue-agnostic or global health fans. It means that our audience is. And we need to focus on serving our audience as well as possible.

Implications

We aren’t dropping the idea of “offering recommendations within lots of causes” entirely. We aren’t completely sure why our audience is the way it is, and recognize that over time the situation may change. Thus,

- We plan to maintain/update our existing research on all causes, including the causes above plus disaster relief (the newest addition).

- We plan to investigate more causes for the purpose of finding more outstanding charities. We will be writing up as much as we can of what we learn in these investigations, which may (along with our do-it-yourself evaluation guide) be helpful to donors interested in particular causes.

- We may provide donors a way to “lobby us” to cover more causes. I’ve considered offering donors the chance to commit to giving $X to our top-rated charity in a given cause, conditional on our covering that cause; if we got enough commitments for a given cause, we would cover it.

However, we no longer consider “number of causes covered” a relevant metric for GiveWell and will be replacing it with “room for money moved,” i.e., the total amount of room for more funding of our very top-rated charities.

Some may be disappointed by this decision, seeing it as a retreat from the opportunity to be a resource for as many people as possible. But we’re not sure how much sense it really makes for an operation like ours to maximize its breadth. Giving decisions are deeply personal, and the kind of work we do is as well. We’ve mostly followed our personal values in making recommendations, and we’re attracting an audience that seems comfortable with these values.

This doesn’t mean that there’s no place for a GiveWell-type operation that focuses on (for example) U.S. causes; but perhaps we aren’t the people to run that operation. Perhaps it takes someone who truly believes that U.S. charities represent the best chance to help people (we do not) to do compelling research and attract the right audience for that cause. We’d be more than happy to see such a group spring up. For our part, we’re happy to remain a niche operation for a niche audience, as long as the niche is big enough. And it appears to be growing.