This is the third of five posts we’re planning on our self-evaluation and future plans.

This post answers a set of critical questions for GiveWell stakeholders, taken from our 2010 list of questions. For each question, we discuss:

- Where we stood as of our previous self-evaluation and plan a year ago.

- Progress since then.

- Where we stand (compared to where we eventually hope to be).

- What we can do to improve from here.

Is GiveWell’s research process “robust,” i.e., can it be continued and maintained without relying on the co-Founders?

Where we stood as of Feb 2010

We had one full-time employee other than the co-Founders: Natalie Stone. We felt that she was capable of maintaining our international aid report with little oversight, but that covering other causes would require heavy involvement from the co-Founders.

Progress since Feb 2010

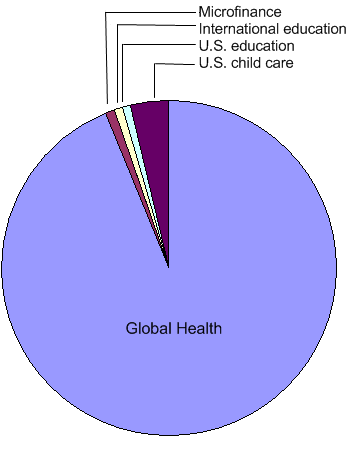

- Natalie Stone has now been with GiveWell for about 18 months. In addition to maintaining and expanding the international aid report, she did the bulk of the work on a new cause, microfinance, and has taken on miscellaneous duties such as responding to emails sent to info@givewell.org.

- We have also made another full-time hire, Simon Knutsson, who started as a volunteer, became a contractor working 30 to 40 hours per week in March 2010, and joined as a full-time employee in January 2011. He has done the bulk of the work for the U.S. Equality of Opportunity report.

- We have systematized the process of keeping our research up to date. This process can now be handled partly by junior staff and partly by volunteers, with very little participation needed from the co-Founders.

- Because we now have useful work for volunteers to do, we’re able to use volunteer work as a way to evaluate potential hires. This has led to two more new hires:

  - We hired one volunteer, Stephanie Wykstra, part-time; she has been working about 20 hours per week for us and plans to work full-time for us over the summer. She did the bulk of the work for our disaster relief report.

  - Another former volunteer, Alexander Berger, plans to come to work full-time for us after he graduates from college.

All in all, by this fall we expect to have 3.5 full-time-equivalent employees apart from the co-Founders. All will focus primarily on maintaining and expanding our existing research, a top priority for us (in particular, finding and evaluating especially promising charities). All will also experiment with, and be available for, other work, including researching new causes.

Where we stand

We have a promising set of junior staff and plenty of valuable work for them to do in keeping our research up to date and thorough. These staff may eventually be able to run the organization entirely, but we don’t feel they’re at that point yet.

What we can do to improve

We plan to continue expanding the scope of the work we assign to junior staff, and to keep working with volunteers who may eventually become staff. This will require a good deal of time for training and management.

Does GiveWell present its research in a way that is likely to be persuasive and impactful (i.e., is GiveWell succeeding at “packaging” its research)?

Where we stood as of Feb 2010

We felt that our research was presented clearly and was well organized, though not emotionally persuasive. We sought to add more external evidence of our credibility and to better integrate the blog and website.

Progress since Feb 2010

As discussed in the previous post, we have added some external evidence of credibility to our website, and established a basic process for adding more. We have not made other progress on this metric; we do not consider improving the emotional persuasiveness of our work to be a priority.

Where we stand

We’re currently satisfied with the presentation of our content and don’t plan on emphasizing this goal in the near future.

What we can do to improve

At some point, more attention to the presentation of our research may be useful for broadening our audience. Right now, though, our impact appears to be growing rapidly just from our niche audience, and continuing to serve this audience well presents major challenges that are higher priorities.

Does GiveWell reach a lot of potential customers (i.e., is GiveWell succeeding at “marketing” its research)?

Where we stood as of Feb 2010; progress since Feb 2010; where we stand

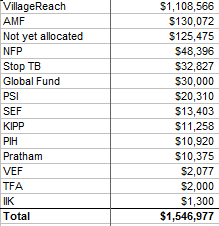

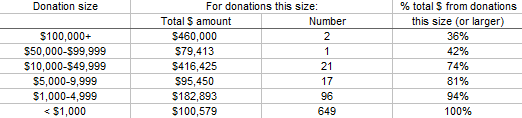

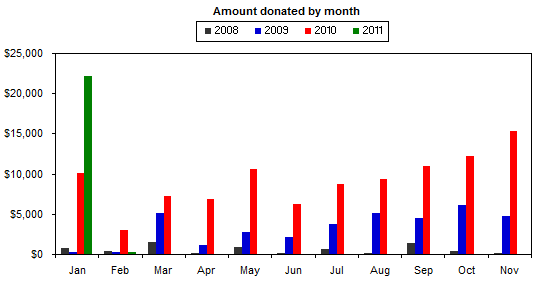

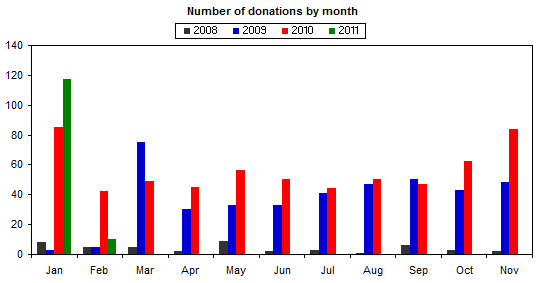

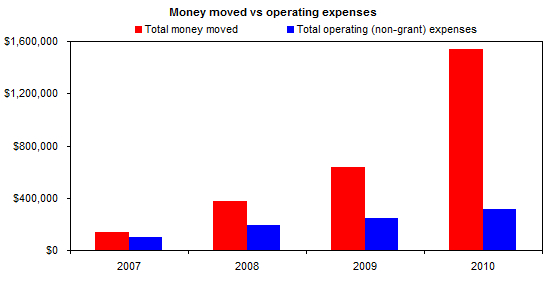

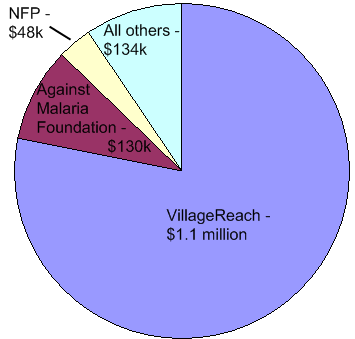

- We tracked over $1.5 million in donations to top charities in 2010, compared to just over $1 million in 2009.

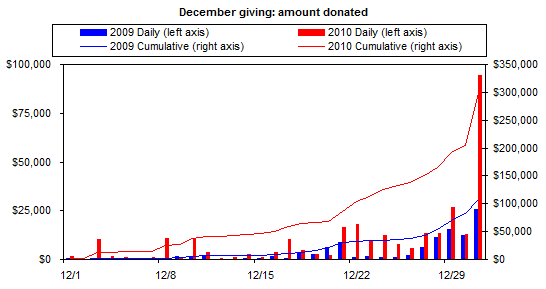

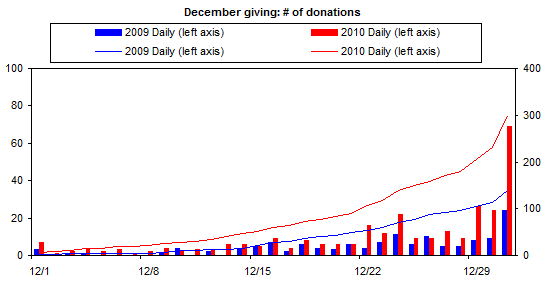

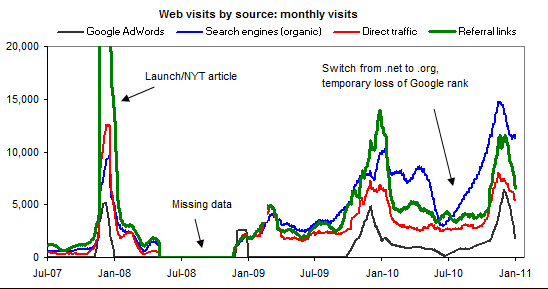

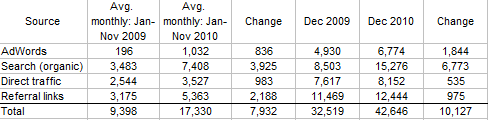

- Our website traffic nearly doubled from 2009 to 2010, and donations through the website nearly tripled. Our overall increase in money moved appears to be driven mostly by (a) a gain of $200,000 in six-figure donations and (b) new donors, largely acquired via search engine traffic and the outreach of Peter Singer.

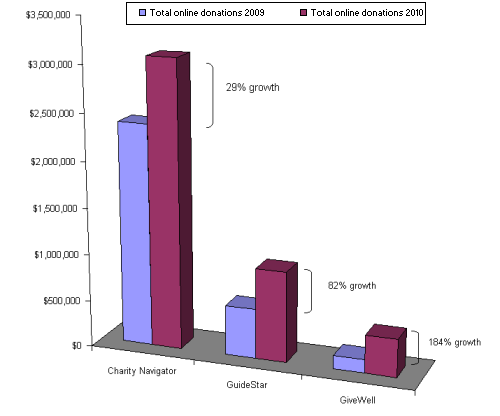

- Our growth in online donations to recommended charities was significantly faster than that of the more established online donor resources (Charity Navigator and GuideStar); our total online donations remain lower than these resources’, but are now in the same ballpark.

We have also formed a content partnership with GuideStar.

The amount of money we’re influencing is now large enough to matter considerably to our top charities. As discussed previously, we now have significant concerns about “room for more money moved.”

Ultimately, we have a loose goal of reaching the point where our “money moved” is at least 9x our operating expenses. We expect our operating expenses to be between $400,000 and $500,000 per year in a steady state (details forthcoming), so reaching this goal would require another 150-200% growth in our money moved.
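As a rough check of this arithmetic, the sketch below applies the 9x target to both ends of the expected expense range. It assumes a baseline equal to the 2010 figure of about $1.5 million in tracked donations; since 2010 money moved was "over $1.5 million," this is an approximation.

```latex
\begin{aligned}
\text{target} &= 9 \times E, \qquad E \in [\$400{,}000,\ \$500{,}000] \\
&\Rightarrow \text{target} \in [\$3.6\text{M},\ \$4.5\text{M}] \\
\text{required growth} &= \frac{\text{target}}{\$1.5\text{M}} - 1 \in [1.4,\ 2.0],
  \ \text{i.e. } 140\% \text{ to } 200\%
\end{aligned}
```

Under these assumptions, money moved would need to roughly double to triple, broadly in line with the estimate above. A larger baseline would lower both ends of the range.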

What we can do to improve

We have many ideas to continue to improve our reach. At this point we feel the most promising are:

- Have more discussions with, and surveys of, those who use our research to decide where to give, in order to understand our audience, deepen our relationships, and ultimately find more such donors. A professional fundraiser could help significantly with this.

- Hold fundraising events to make it easier for our existing supporters to bring in new supporters. We held a small event focused on VillageReach in December 2010; it cost about $3,000 and resulted in $17,800 in donations to VillageReach and $7,500 in donations to GiveWell.

- Public relations and press projects aimed at earning media coverage.

- More active pursuit of people we consider highly influential among our target audience (the better we understand our audience, the better our ability to seek out such people).

- Website improvements, including:

  - Improving our search engine optimization. (Most of our gains in traffic in 2010 came via search.)

  - Minimizing the processing fees on donations.

  - Better encouraging donors to share their actions and recommendations with friends (using email and social media).

  - Offering donors an easy way to be reminded to donate in December. (In 2010, over 25% of the money donated through our website was given on the very last day of the year.)

- Improve our ability to track website users and understand how they found us.
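The December 2010 VillageReach event mentioned in the list above can be summarized with a quick back-of-the-envelope calculation. It uses only the figures given there, treats the cost as exactly $3,000 (the actual cost was "about" that), and does not account for donations that might have been made without the event.

```latex
\begin{aligned}
\text{donations} &= \underbrace{\$17{,}800}_{\text{VillageReach}} + \underbrace{\$7{,}500}_{\text{GiveWell}} = \$25{,}300 \\
\text{net of cost} &= \$25{,}300 - \$3{,}000 = \$22{,}300 \\
\text{donations per dollar spent} &= \frac{\$25{,}300}{\$3{,}000} \approx 8.4
\end{aligned}
```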

Is GiveWell a healthy organization with an active Board, staff in appropriate roles, appropriate policies and procedures, etc.?

Where we stood as of Feb 2010

We were happy with our Board and other aspects of our organization.

Progress since Feb 2010

We remain happy with our Board and our policies and procedures. However, we now have a significant need to raise more operating funds. In a nutshell:

- Our improved ability to recruit and employ junior staff has resulted in increased expenses, both present and future.

- We have lost two major sources of revenue: a major donor changed careers, and the Hewlett Foundation reduced the size of its support.

Details will be forthcoming in a financial analysis document.

Where we stand

We have a significant need for more operating support and intend to make this a major priority for 2011.

What we can do to improve

We aim to raise operating support by:

- Applying for support from major institutional funders.

- Having discussions with people who have a substantial history of being close to our project, including Board members and major “customer donors” (i.e., donors who have used our research to decide where to give), about the possibility of their supporting GiveWell directly.

We plan to continue avoiding solicitation of funds from the public at large. We wish to avoid “competing with” our recommended charities for funding, and we feel our credibility would suffer if we were asking for money ourselves.

What is GiveWell’s overall impact, particularly in terms of donations influenced? Does it justify the expense of running GiveWell?

Where we stood as of Feb 2010

In 2009, we tracked just over $1 million in donations to our top charities, though over 30% of this $1 million came from pledges and restricted donations that had been made in 2008. We had a reasonably strong presence in our sector as members of the Alliance for Effective Social Investing and the GuideStar Exchange Advisory Board.

Progress since Feb 2010

As discussed above, our website traffic and money moved have grown substantially, and we now direct significant funding to our top-rated organizations.

We remain members of the Alliance for Effective Social Investing and the GuideStar Exchange Advisory Board; we have also joined two other collaborative groups in our sector (Markets for Good and the Market Development Working Group of the Social Impact Exchange) and begun a content partnership with GuideStar.

Where we stand

At this point we can point to significant impact for our top charities; the impact they, in turn, have with our additional funds remains to be seen, but we are well-positioned to evaluate it over time.

That said, our “money moved” is still not at the level we would eventually like to see, and we still know too little about our impact because we have little information on where donors would have given without our research. We are currently investigating this question.

What we can do to improve

- Survey donors on where they would have given if not for our research, to get a better sense of our overall impact. (Currently in progress.)

- Pursue the previously outlined strategies for increasing both our “money moved” and “room for more money moved” (both are necessary).

- Pursue long-term sustainability of the organization – in particular, continue developing junior staff and raise the operating funding needed to maintain these staff.